Virtual Memory Basics: Why Look at PAGEFILE.SYS?

Introduction

Memory, or storage, is essential to the operation of a computer. There are different kinds of memory as well, separated into two categories:

- Primary Storage: This is short-term, high-speed, low-capacity memory. Motherboard RAM and the different types of cache contained inside and outside the CPU are used here to store instructions and data.

- Secondary Storage: This is long-term, low-speed, high-capacity storage. Hard disk, CD, and DVD media fall into this category.

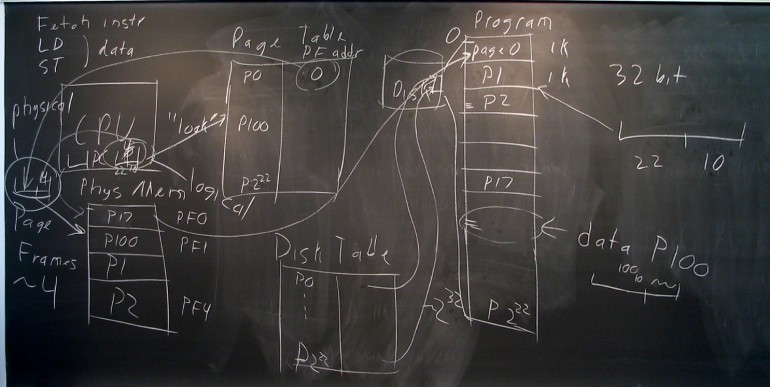

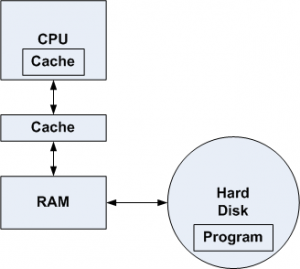

Figure 1 illustrates the flow of instructions and data in a computer. For the CPU to execute a program, the instructions and data that make up the program must be copied from the hard disk into RAM. The CPU only fetches instructions and data from RAM (or cache), never directly from the hard disk.

Once a program (or a portion of it) has been copied into RAM, the CPU will begin fetching instructions and data from the memory locations the program was copied into. The CPU will always look inside its internal cache first, to see if the instruction or data it needs is stored there. A cache hit means the instruction or data has been found in the cache. A cache miss means the instruction or data is not stored in the cache.

If there is a miss in the internal cache, the CPU then looks in the external cache. A miss there means the CPU has to load the instruction or data from RAM. This is the slowest case, because RAM has a much longer access time than either cache due to its internal architecture.

Figure 1: The flow of instructions and data in a computer

Data from RAM is then also written into the cache during a miss so that future accesses to the same data will result in hits and better memory performance.
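The lookup order described above can be sketched in a few lines of Python. This is only an illustration: the latency figures are made-up cycle counts, the `fetch` function and its dictionaries are hypothetical names, and the sketch ignores cache capacity and eviction entirely.

```python
# Hypothetical latencies in CPU cycles; real values vary by processor.
LATENCY = {"internal cache": 4, "external cache": 12, "RAM": 200}

def fetch(address, internal, external, ram):
    """Return (value, level, cycles) for one read, filling caches on a miss."""
    cycles = LATENCY["internal cache"]
    if address in internal:                      # hit in the internal cache
        return internal[address], "internal cache", cycles
    cycles += LATENCY["external cache"]
    if address in external:                      # hit in the external cache
        internal[address] = external[address]
        return internal[address], "external cache", cycles
    cycles += LATENCY["RAM"]                     # miss in both caches
    value = ram[address]
    external[address] = value                    # copy into the caches so the
    internal[address] = value                    # next access is a hit
    return value, "RAM", cycles

ram = {0x1000: "MOV", 0x1004: "ADD"}
internal, external = {}, {}
first = fetch(0x1000, internal, external, ram)
second = fetch(0x1000, internal, external, ram)
print(first)
print(second)
```

The first access misses both caches and pays the full RAM cost. The second access to the same address is served from the internal cache.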

Modern operating systems, such as Windows and Linux, support multitasking. This allows multiple programs to run concurrently (but not simultaneously) on one CPU. Concurrent execution of multiple programs means that, over a period of time, every program has received some execution time on the CPU. This is accomplished by running one program for a short period of time (called a time slice), then saving all CPU registers, reloading the CPU registers for the next program that will run, and resuming execution of the next program. This way, each program that is “running” gets multiple slices of time every second to execute.

To a human being, it appears that all programs are running simultaneously, even though that is impossible with a single CPU.
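Time slicing can be illustrated with a simple round-robin scheduler. This is a minimal sketch under stated assumptions: the program names, work amounts, and the `round_robin` function are hypothetical, and the saving and reloading of CPU registers is not modeled.

```python
from collections import deque

def round_robin(work, time_slice):
    """work maps program name -> units of CPU time needed.

    Returns the order in which programs ran, as (name, units) pairs.
    """
    queue = deque(work.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(time_slice, remaining)     # run for at most one time slice
        timeline.append((name, ran))
        if remaining > ran:                  # not finished: back of the queue
            queue.append((name, remaining - ran))
    return timeline

timeline = round_robin({"editor": 5, "browser": 3, "player": 4}, time_slice=2)
for name, ran in timeline:
    print(f"{name} ran for {ran} unit(s)")
```

Only one program runs at any moment, yet all three make steady progress because they take turns.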

However, simultaneous execution is possible when the single CPU has multiple cores, or there are multiple CPUs in the system. Then you have the case where two or more programs really can run at the same time, each on its own CPU or core.

With multiple processes running, the RAM allocated to each program will add up and possibly exceed the amount of physical RAM installed on the system. For example, there is 2 GB of RAM, but the running programs together require 2.4 GB of RAM. Where does the extra 400 MB of RAM come from? The system “borrows” it from the hard disk. This is the essence of virtual memory. The total amount of memory available to the operating system for running programs equals the amount of RAM plus the amount of “paging” storage available on the hard disk.
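The arithmetic in this example can be written out as a short sketch. The function names are hypothetical, the 2 GB page file size is an assumed value, and the figures use 1 GB = 1000 MB to match the rounding in the text.

```python
def paging_needed_mb(ram_mb, demand_mb):
    """Memory that must be borrowed from the hard disk, in MB."""
    return max(0, demand_mb - ram_mb)

def total_virtual_memory_mb(ram_mb, pagefile_mb):
    """RAM plus paging storage available to the operating system."""
    return ram_mb + pagefile_mb

borrowed = paging_needed_mb(ram_mb=2000, demand_mb=2400)
total = total_virtual_memory_mb(ram_mb=2000, pagefile_mb=2000)  # assumed page file size
print(borrowed, total)
```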

Demand-Paging Virtual Memory

Operating systems utilize a demand-paging virtual memory system in order to make efficient use of RAM and support concurrent execution of multiple programs in RAM at the same time. In a paged memory management system, RAM is divided into fixed-size blocks, typically 4 KB in size, called pages. One or more pages are allocated to a program when it is copied into RAM for execution. Note that the entire program does not have to be copied into RAM for it to begin execution. The reason for this will be explained shortly.

The pages allocated to a program do not have to all be in the same area of RAM. Much like file fragmentation on a disk, program pages may be fragmented as well, and spread out across the RAM memory space. Special registers and tables in the protected-mode architecture of the Intel 80x86 CPU contain pointers to allocated pages so that they may be properly accessed when the CPU is fetching an instruction or data from the required page.
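The idea of a table that points to scattered pages can be sketched as follows. This is a deliberate simplification: it uses a single flat dictionary as the page table, whereas the real 80x86 hardware uses multi-level tables, and the table contents and the `translate` function are hypothetical.

```python
PAGE_SIZE = 4096  # 4 KB pages, as described above

def translate(virtual_address, page_table):
    """Map a program's address to a RAM address through the page table."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    ram_page = page_table[page_number]       # where that page sits in RAM
    return ram_page * PAGE_SIZE + offset

# Hypothetical table: program pages 0, 1, 2 live in RAM pages 7, 2, 9,
# so the program is fragmented across RAM.
page_table = {0: 7, 1: 2, 2: 9}
physical = translate(0x1234, page_table)
print(hex(physical))
```

Address 0x1234 falls in program page 1 at offset 0x234. Because page 1 is stored in RAM page 2, the access lands at 0x2234.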

Now, consider a program that provides a menu of options when it starts up. Once a user selects a particular menu option, a different section of the program code is executed. This is an example of why it is not necessary to load the entire program into memory at the beginning of its execution. Perhaps only the portion of the program that contains the menu code is loaded into one or more pages. Then, when a user chooses an option, the pages for the program code that implement that option are loaded. Protected mode provides a way of determining if the instruction or data being fetched by the CPU is present in a page or not. If it is not present, a page fault is generated that causes the operating system to load the necessary program code into a new page. This of course results in a small loss of performance, as the CPU must now wait until the information has been transferred from the hard disk into a RAM page.

The benefit here is that a page is not loaded into RAM until it is needed. This is the essence of demand paging. When there is a demand for the page, it is allocated and loaded.
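The menu example can be modeled with a small class that loads a page only on its first access. This is a sketch, not how any particular operating system is implemented; the class name, page names, and page contents are hypothetical.

```python
class DemandPagedProgram:
    """Loads a page from disk only when it is first accessed."""

    def __init__(self, pages_on_disk):
        self.disk = pages_on_disk    # page name -> contents on the hard disk
        self.resident = {}           # pages currently loaded in RAM
        self.page_faults = 0

    def access(self, page):
        if page not in self.resident:            # page is not present
            self.page_faults += 1                # page fault
            self.resident[page] = self.disk[page]
        return self.resident[page]

program = DemandPagedProgram(
    {"menu": "menu code", "option_a": "code for A", "option_b": "code for B"}
)
program.access("menu")       # fault: menu code is loaded
program.access("option_a")   # fault: user chose option A
program.access("menu")       # no fault: already resident
print(program.page_faults, sorted(program.resident))
```

The page for option B is never loaded, because nothing ever demanded it.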

But if we just continue allocating and loading pages into RAM, eventually we will run out of free pages. When this happens, we cannot have the computer grind to a halt, or give an error because there is not enough RAM to start a program or continue execution of a program. Instead, when a page fault occurs and there are no free pages, a victim page must be chosen to be overwritten by new information from the hard disk. Ideally, a victim page that is never needed again is chosen, but this is not a requirement.

The victim page may need to be written back to the hard disk before its RAM page is overwritten with new data. The protected mode architecture keeps track of the status of each page and knows if a page is “dirty,” meaning that it has been modified since being loaded into RAM. A dirty page must be written back to the hard disk. If the page is not dirty, it can just be overwritten with new data.
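The dirty-page rule can be expressed as a short sketch. The `evict` function, the page names, and the use of a dictionary to stand in for the paging file are all hypothetical.

```python
def evict(victim, ram, dirty, pagefile):
    """Free the victim's RAM page, writing it out first only if it is dirty.

    Returns True if the page had to be written to the paging file.
    """
    contents = ram.pop(victim)
    if victim in dirty:
        pagefile[victim] = contents   # modified data must be preserved
        dirty.discard(victim)
        return True
    return False                      # clean page: simply overwrite it

ram = {"A1": "modified data", "B1": "unmodified code"}
dirty = {"A1"}
pagefile = {}
wrote_a1 = evict("A1", ram, dirty, pagefile)
wrote_b1 = evict("B1", ram, dirty, pagefile)
print(wrote_a1, wrote_b1, pagefile)
```

Only the modified page ends up in the paging file, which is why the file tends to hold data a program was actively working with.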

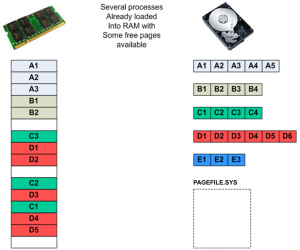

Let us take a visual look at this process.

Figure 2(a) shows a number of running processes (another term for running programs) that already have pages loaded into RAM. There are a few free pages in RAM still remaining to be allocated as needed. The operating system has not had to use PAGEFILE.SYS yet. PAGEFILE.SYS is the portion of hard disk storage allocated for storage of dirty pages in a Windows environment.

Figure 2(a): Initial state of RAM with multiple processes running

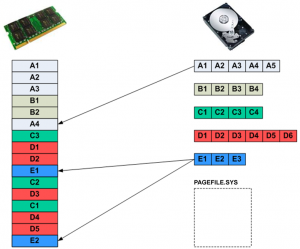

In Figure 2(b), pages A4, E1, and E2 have been loaded into RAM. There are now no free pages in RAM left to allocate.

Figure 2(b): New pages have been loaded into RAM

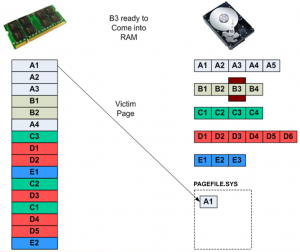

In Figure 2(c) page B3 needs to come into RAM, but since there are no free pages available, a victim page must be chosen. The page replacement algorithm chooses page A1 as the victim page. A page replacement algorithm is responsible for keeping track of allocated RAM pages and choosing a victim based on a predetermined metric, such as how long a page has been resident in RAM, how many times it has been accessed, or how long it has been since its last access. The operating system determines that page A1 is dirty and must be written back to disk, so it is written to PAGEFILE.SYS.
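The three metrics mentioned above correspond to well-known replacement policies: first-in first-out (longest resident), least frequently used (fewest accesses), and least recently used (longest since last access). The sketch below shows how each would pick a victim. The bookkeeping values are invented for illustration, and nothing here is meant to describe the algorithm Windows actually uses.

```python
from dataclasses import dataclass

@dataclass
class PageInfo:
    name: str
    loaded_at: int      # time the page was loaded into RAM
    access_count: int   # number of accesses since loading
    last_access: int    # time of the most recent access

def choose_victim(pages, policy):
    """Pick a victim page name according to the named policy."""
    keys = {
        "fifo": lambda p: p.loaded_at,     # resident the longest
        "lfu": lambda p: p.access_count,   # accessed the fewest times
        "lru": lambda p: p.last_access,    # longest since its last access
    }
    return min(pages, key=keys[policy]).name

# Hypothetical bookkeeping for three resident pages.
pages = [
    PageInfo("A1", loaded_at=1, access_count=9, last_access=40),
    PageInfo("C2", loaded_at=5, access_count=2, last_access=30),
    PageInfo("D1", loaded_at=8, access_count=6, last_access=12),
]
for policy in ("fifo", "lfu", "lru"):
    print(policy, choose_victim(pages, policy))
```

The same set of pages yields a different victim under each policy, so the choice of metric directly affects which pages end up in the paging file.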

Figure 2(c): A victim page needs to be chosen

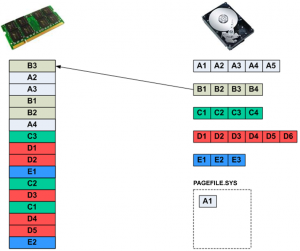

Once page A1 has been freed up, page B3 can be loaded into RAM, as shown in Figure 2(d).

Figure 2(d): A new page is loaded into RAM

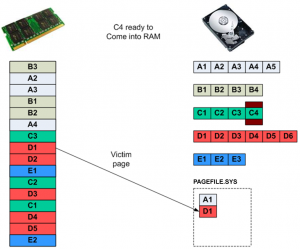

In Figure 2(e), page C4 needs to be loaded into RAM, but there are still no free pages. Page D1 is chosen as the victim and, because it is also dirty, is written back to PAGEFILE.SYS.

Figure 2(e): Another victim page must be chosen

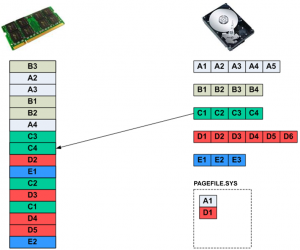

This allows page C4 to be loaded into RAM, as shown in Figure 2(f).

Figure 2(f): Another new page is loaded into RAM

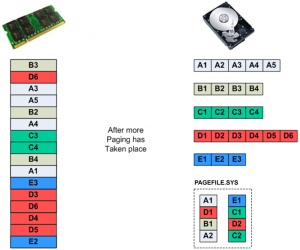

As this paging scenario continues to play out, processes keep needing new pages loaded into RAM, victims continue being swapped back to PAGEFILE.SYS, and after a while we see the state of the system as shown in Figure 2(g).

Figure 2(g): RAM contents and PAGEFILE.SYS contents after multiple page swaps

It is these “dirty” pages stored in PAGEFILE.SYS that are potential sources of good forensic evidence during an investigation. PAGEFILE.SYS is a protected and hidden operating system file, but is easily accessible to forensic software. The size of PAGEFILE.SYS is typically at least as large as the amount of motherboard RAM. Figure 3(a) shows the current size of the paging file. This can be made larger or smaller by the user by clicking the Change button, which brings up the Virtual Memory paging file size adjustment window, shown in Figure 3(b).

Figure 3(a): Advanced tab in Performance Options shows the Virtual memory paging file size

Figure 3(b): Virtual Memory paging file size window

In terms of performance, the larger the page file the better, and the more RAM the better. If there is not enough RAM to adequately support the number of running processes, the operating system can get into a low-performance state called thrashing, where there are almost continuous page faults and plenty of swapping, but not much execution of processes, because so much time is being devoted to swapping.
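Thrashing can be demonstrated with a small simulation that counts page faults for the same access pattern under different amounts of RAM. The sketch assumes a least-recently-used replacement policy and a made-up access pattern that cycles through five pages; both are illustrative choices, not details from a real system.

```python
from collections import OrderedDict

def count_faults(references, ram_pages):
    """Count page faults for a sequence of page accesses under LRU."""
    resident = OrderedDict()                 # ordered from least to most recent
    faults = 0
    for page in references:
        if page in resident:
            resident.move_to_end(page)       # mark as most recently used
        else:
            faults += 1
            if len(resident) >= ram_pages:
                resident.popitem(last=False) # evict the least recently used
            resident[page] = True
    return faults

references = [0, 1, 2, 3, 4] * 20            # 100 accesses over 5 pages
too_little = count_faults(references, ram_pages=4)
enough = count_faults(references, ram_pages=5)
print(too_little, enough)
```

With room for all five pages, only the first five accesses fault. With one page fewer, every single access faults, which is the behavior described above as thrashing.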

PAGEFILE.SYS Solves the Case!

So, why does a forensic investigator need to care about PAGEFILE.SYS? Here is an actual example of a forensic investigation in a case that was solved by evidence located in PAGEFILE.SYS. The main goal of the case was to determine if a computer accessed a particular file over the network. The forensic investigation did not show any references to the file in the command line history, or in recent documents, or in deleted files, nor was the file stored anywhere on the disk partition.

The PAGEFILE.SYS file was processed by a strings program to extract all printable character strings. When the output file was searched, a network path to the file being searched for was present. This means that the same file path was once resident in a RAM page, and that RAM page was chosen as a victim and written back to PAGEFILE.SYS. If the file path was resident in a RAM page, that indicates the file was accessed in some way by the system, which is the proof being sought in the investigation.
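The article does not say which strings program was used, so the following is only a sketch of the general technique. It extracts runs of printable characters in both ASCII and UTF-16LE (Windows often stores text in the latter) and searches them for a path. The minimum run length of 6, the function names, and the server and file names in the sample data are all assumptions made for illustration.

```python
import re

# Runs of at least 6 printable characters (the length is an arbitrary choice).
ASCII_RUN = re.compile(rb"[\x20-\x7e]{6,}")
UTF16_RUN = re.compile(rb"(?:[\x20-\x7e]\x00){6,}")

def extract_strings(data):
    """Return printable ASCII and UTF-16LE strings found in raw bytes."""
    found = [m.group().decode("ascii") for m in ASCII_RUN.finditer(data)]
    found += [m.group().decode("utf-16-le") for m in UTF16_RUN.finditer(data)]
    return found

def find_hits(data, needle):
    """Return extracted strings that contain needle, ignoring case."""
    needle = needle.lower()
    return [s for s in extract_strings(data) if needle in s.lower()]

# Hypothetical fragment standing in for paging file contents.
sample = (
    b"\x00\x01garbage\xff"
    + r"\\fileserver\share\report.docx".encode("utf-16-le")
    + b"\x00\x00\x90\x90"
)
hits = find_hits(sample, r"\\fileserver\share")
print(hits)
```

A real paging file is often several gigabytes, so in practice the data would be read in chunks from a forensic copy rather than loaded into memory at once.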

Conclusion

The evidentiary value of PAGEFILE.SYS has hopefully been demonstrated here. In the same fashion, the Windows HIBERFIL.SYS file can also be a forensic gold mine, as it contains a complete copy of RAM contents at the time a computer was put into hibernation.

For Linux investigations, the equivalent to PAGEFILE.SYS is the swap space (a swap partition or swap file), where RAM pages are stored when they are replaced.

The more a forensic investigator understands about the details of how an operating system functions, the better. Knowing the details of how virtual memory is implemented gives the investigator another source of possible evidence that may be important to a case.

About The Author

James L. Antonakos is a SUNY Distinguished Teaching Professor of Computer Science at Broome Community College, in Binghamton, NY, where he has taught since 1984. James teaches both in the classroom and online in classes covering electricity and electronics, computer networking, computer security and forensics, information management, and computer graphics and simulation. James is the designer and director of the new 2-year AAS Degree in Computer Security and Forensics at Broome Community College. James is also an IT security consultant for Excelsior College and an online instructor for Champlain College and Excelsior College. James has extensive industrial work experience as well in electronic manufacturing for both commercial and military products, particularly in flight control computer technology for Navy aircraft. James also consults with many local companies in the areas of computer networking and information security. James is the author or co-author of over 40 books on computers, networking, electronics, and technology. He is also A+, Network+, and Security+ certified by CompTIA and ACE certified in computer forensics by AccessData. James is a frequent presenter at the annual New York State Cyber Security Conference, the founder of WhiteHat Forensics, and an NCI Fellow for the National Cybersecurity Institute in Washington, DC.